Intercom on Product: Product strategy in the age of AI

As new AI-native startups and industry giants navigate the AI revolution, the product landscape is undergoing a profound transformation. Can businesses seize the potential of this disruptive force to drive innovation and thrive in today’s competitive market?

In the past few years, and particularly since the launch of ChatGPT last November, we’ve witnessed a boom in generative AI that has pushed the boundaries of creativity and innovation – and has also begun to upend industries in ways we could have barely imagined. From text to audio and imagery, these latest AI capabilities have already sparked a new generation of AI-native startups with workflows entirely powered by AI, and inspired countless others to develop or adopt AI-powered features and products.

The applications are endless – UX, UI, content creation, data analytics, customer service, sales prospecting, marketing automation, you name it. Now that the first wave of dust has settled, it’s an ideal time to reflect on what these changes mean for product strategy and product leaders. Whether you’re a product manager, a domain expert with decades of experience, or a fresh-faced startup founder, these times bring not only new challenges but also game-changing opportunities. Will AI help people amplify their productivity and expand to new markets, or will it render certain roles obsolete? Will startups armed with innovative AI approaches succeed in disrupting well-established categories? And will incumbents be able to keep up with the relentless pace of innovation?

In today’s episode of Intercom on Product, I sat down with Paul Adams, our Chief Product Officer, to talk about product strategy in the age of AI.

Here are some of the key takeaways:

- To truly disrupt categories with AI, startups must consider whether their products or features offer a unique attack angle that incumbent companies cannot easily replicate.

- While AI can streamline tasks in SaaS categories like sales and customer service, offering relief from repetitive work, the impact on project management is more nuanced.

- As AI capabilities advance, people will likely become more comfortable relying on it for tasks that involve not just analysis but judgment – albeit still with a need for human oversight.

- When considering new capabilities such as AI, product managers should focus on how they can expand the user base, enhance users’ abilities, or eliminate tasks entirely.

- Whether you’re a startup or an established company, it’s a good time to brush up on the ideas behind The Innovator’s Dilemma.

If you enjoy our discussion, check out more episodes of our podcast. You can follow on Apple Podcasts, Spotify, YouTube or grab the RSS feed in your player of choice. What follows is a lightly edited transcript of the episode.

Bet the farm

Paul Adams: Hey, everyone, welcome to Intercom on Product. I’m Paul Adams, and with me today, as always, is Des.

Des Traynor: Hey Paul. How are you doing?

Paul: All right, today we’re going to talk about AI and product strategy. We’re going to talk about what that means for people in a range of different positions on this. We feel it’s a great time to talk about it because the first wave of dust has settled. We’ve seen what’s possible from this kind of first wave of companies, and like any big technology, it’s unclear to people at the start how it’s all going to pan out. When you look at the landscape today, we have people who are all in, and they’re like, “Bet the farm; bet the company.” And then you’ve got people who are still a little unsure: “Is this really a big deal? Is this more Silicon Valley Kool-Aid?” Des, where do you think you are on it?

“When you look at some of the capabilities, I’m pretty certain that whole industries and categories of software will be upended”

Des: I am definitely in. Bet the farm, bet the company, bet the Kool-Aid, go to your neighbors, and bet their farms. I think it’s huge. I understand the cause for skepticism because it does seem to have conveniently arrived at a time when Silicon Valley and investors were gagging for something new to talk about. But when you have the experiences that AI is delivering at the moment, it’s quite clear something massive is happening, and we’re still in this sort of embryonic stage of seeing it. As you mentioned, the dust has settled. It really is the first wave of dust. We are now starting to see whole companies getting a series A or B off the back of being an AI-native applied company.

When I talk about this, what I mean is not OpenAI or Anthropic who are providing the actual AI, but people who are building whole workflow products that are entirely powered by AI. As in, if OpenAI and Anthropic didn’t exist, this company also wouldn’t exist. People really leaning on it as a platform. When you look at some of the capabilities, it’s a straight-line certainty for me that whole industries and categories of software will be upended.

Paul: Sometimes, in tech, we talk about extinction events. Mobile came along, and mobile-first companies killed companies that weren’t mobile-first and couldn’t adapt. Prior to that, it was the same with cloud-first companies. Do you think this is an extinction event-type thing?

Des: I think in certain pockets, certainly. And in a lot of other pockets, if it’s not an extinction event, it’s because of one new dynamic. In some of these areas, let’s say, with an OpenAI server, the power is accessed through an API, like, “Hey, summarize this 5,000-word incident for me,” pinging that over to a third party, and getting back the response. That is not the same as rebuilding your entire company to make it iOS-native. So, as a result, there will be areas of software where I think incumbents will actually make use of this and get a lot of value. Some areas will be extinction events, but it’s not like an asteroid, it’s not going to take out the whole industry. I think you’re going to see a lot of the big companies actually get bigger.

“If we go back to November 29th, when we saw ChatGPT 3.5, what became obvious, or at least the first thing we saw was that this thing was very, very good at being conversational”

Paul: Yeah. Which obviously happened with mobile. Google and Facebook eventually did figure out how to do it.

Des: They did, that’s right. They figured out how to do it quicker than anyone could figure out how to be great at, say, search. We will come back to this idea of a ratio in a second, but learning Objective-C and deploying an Objective-C or iOS-powered interface on a mobile phone to an insanely powerful search engine – it turns out that the hard bit of all that is the insanely powerful search engine. It’s that ratio of how much new work we have to do vs. how much of the incumbent work is still valid. Google’s backend is still extremely valid, and the front end might change, but it turns out that crawling the entire internet is not something that two randos falling out of YC can do in one evening.

Paul: Let’s talk about both sides of that. There are table stakes features – the core features a product needs in a certain category. Then there are new things it can do and new technologies enabling things. Let’s start with the new things that AI can do. You have a whole list of things that make you bullish on it.

Des: That’s true. If we go back to November 29th, when we saw ChatGPT 3.5, what became obvious, or at least the first thing we saw was that this thing was very, very good at being conversational. It was very, very good at understanding humans and very, very good at replying back. It took prompting and instruction really well, and it was very good at basic text wrangling: expand upon this, summarize that, rephrase this, re-tone that.

It was also very, very good at deduction or inference. You could give it a complex scenario and ask, for example, “If somebody is struggling with a long-term illness inside a burning building, which is the bigger issue here?” And it worked out answers to those questions. To humans, these things sound insanely simple. But to get a machine to actually understand it, make an inference, and suggest an action is quite powerful. Or, “Given the state of this project based on all the updates you’ve read, what do you think the most important issue is?” And it’ll actually do a really good job on that. So the idea of deductive or inductive reasoning is pretty powerful there as well.

“I think people don’t realize how much this has a way of creeping into your normal life”

And we’re just talking about the text domain. We saw DALL-E and DALL-E 2 have the ability to, given a piece of text, render an image, and it was getting insanely good. Now, the latest Midjourney stuff is just breathtaking.

People often ask, why is that useful? Well, there are loads of scenarios where people aren’t creative, but they know what they want. So, I’d like to send this email, and I’d like it to be sent in a light thin font on a dark textured background. And it can give you 27 versions of that on the screen. All of a sudden, people who can’t do art can do art, right?

Being able to generate imagery is not to be sniffed at. A lot of these things get typified by the funny use case, “Show me a cheeseburger eating a planet,” and it does a really good job of that. But I guarantee you, “Give me a really nice header background for my new website” is going to be a cool feature in Squarespace or Wix or something like that.

We have voice. This has been coming along. There’s both the ability to parse voice – pretty much real-time audio transcription. And it can generate voices as well. That’s the latest breakthrough in AI. So if you look at, say, Synthesia or Play.ht, you can give it Mission: Impossible shit. You give it 90 seconds of you speaking, and it’ll make a passing impression of you for a single sentence. Give it an hour of you speaking, and it starts to get it. You could certainly get away with it.

“You couldn’t pressure me into being an AI skeptic at this point”

And then generating video. Synthesia does this fake video avatar thing where you can record yourself and some mannerisms of you, and it’ll be able to make it look like you’re talking. But we’re going to be able to generate full-on video in the same way we can generate imagery.

When you think about all these categories, I think the mistake I was making initially, and a lot of folks make initially, is to think, “Right, that sounds really important. If I’m working in Adobe, I should be all over this.”

I think people don’t realize how much this has a way of creeping into your normal life. That voice tech can literally be the thing that will power the future of messaging or the future of product interaction, where you just talk to your product while you’re driving or whatever. All of that is now possible. And similarly, the imagery isn’t just “hot dogs eating planets.” It can literally design an entire background and re-skin this product I’m using to look prettier.

I could keep going with other cool stuff that’s now possible. But when I look at the collective weight of all of that potential, and I think about its applications to specific software domains, to creativity, to UI, to how humans might interact with other humans, to what jobs could be automated, and what parts of jobs could be automated, you couldn’t pressure me into being an AI skeptic at this point. It’s not possible. It’d be like trying to push back the tide. It’s pretty obvious to me that massive transformations are coming, and you’re better off getting on the right side of them.

Taking on giants

Paul: I mean, I’m there too. In some of the things you said there, like imagery, for example, the entire advertising industry would probably be turned upside down. Certainly, if you work in a creative or media agency. I know people who work in a creative agency already using AI to generate all or most of their work.

Let’s talk about the other side of it. You mentioned some startups I haven’t heard of before. It’s just an explosion. I don’t think anyone could keep up with all the new types of things built on this new generation of technology. Meanwhile, you have huge companies, hundreds of millions of dollars in revenue, who’ve built a business over one or two decades. In the early days of Intercom, we were a bit naive. We were coming in like “hot startup taking on the incumbent,” giant killing-type mindset.

Des: “We’re going to kill Salesforce.”

Paul: Yeah, chip on the shoulder, giant killer, right? Then you realize, “Oh.” An area like reporting and stuff, you’re like, “Oh, this is a big, deep thing.”

Des: Yeah. These guys are big for a reason.

“You really have to say, ‘Hey, I think if this area was to be built again today, you’d do it fundamentally differently’”

Paul: Years of product development are required just to have the table stakes. How do you think companies should think about that?

Des: I think you can look at this from both sides. Let’s say you’re a scrappy startup, and you’re picking an enemy. If you say, “Let’s go after Workday,” what is the attack angle on Workday that AI permits? Well, you look at all the capabilities that we have. You could try and generate performance reviews and try and parse that sort of stuff.

But ultimately, let’s say you find a few examples where you can sprinkle and dot in bits of AI magic to simplify existing workflows. I think anyone who’s used Workday would have to admit… I don’t think anyone gives a shit about the complexity of the workflows inside that company. That’s not their ROI. That’s not the reason people buy Workday.

The reason people buy Workday is, I think, because it’s the largest ERP for humans you could imagine. They have a massive enterprise sales team. They’ve built a huge brand of, “We are the final boss when it comes to HRIS systems”, and that’s what they care about.

Paul: And almost infinite configurability.

Des: Yeah. The question then becomes, if you were to rebuild all this in an era of AI, what would change? If people are buying extreme configurability, it’s not obvious to me that the attack angle is there. I think people are buying a glorified WYSIWYG to a database where they can connect thing to thing by manager relationship and say, “Thing has report; thing has home address; thing has salary.” I don’t think any of that really changes massively in the near term. You could have a far more beautiful Workday that’s AI-powered. I just don’t think anyone would give a shit. You’d be duking it out with other series A or B startups who are probably more mature than you.

“Your AI might be amazing at fraud detection, even better than Stripe’s AI for fraud detection, but that’s probably 15% of the puzzle”

But to give you a sexier example, if you and I say, “Hey, we’re going to go kill Stripe, but we’re going to use AI.” Job one, you start working the AI, I’m going to stick on a suit and meet with seven banks and Visa and MasterCard to see if I can get permission to charge credit cards. That is the actual task. Then, how do I go and build a brand that people trust? Yeah, your AI might be amazing at fraud detection, even better than Stripe’s AI for fraud detection, and your AI might be amazing at detecting the right optimal pricing points for B2B SaaS companies. But that’s probably 15% of the puzzle. The other 85% of the puzzle is where I’m 10 years behind Stripe, which is chasing banks.

If you’re a startup, you have to believe in the following things. One is, if you were to build this entire product category from the ground up today, given what’s now possible with this AI revolution, would you do it substantially differently? How much of the incumbent products’ technology is still relevant in the future? If it’s a very, very small amount, maybe their login system and shit like that, yeah, there’s blood in the water. Get going.

However, if we take, say, MailChimp, and we’re going to use AI to write the emails and style the notes, that’s cool. Most people like MailChimp because they have a really high deliverability rate or email newsletter analytics and list management and subscription management, and they’ve got spam detection and all that sort of shit. You have to build all that. And while you’re building all that – let’s say that’s 30 months’ worth of work – MailChimp will probably work out how to build your little AI features. Then you have what they have, but they still have a far more mature and well-known brand. The one big differentiator you were bringing to the party, they now have. This is especially true if the core engine of differentiation is actually on the other end of an OpenAI API call. Because in that world, I’m sure they’ll work out the prompts, too. That’s the startup angle. You really have to say, “Hey, I think if this area was to be built again today, you’d do it fundamentally differently.”

“Maybe the AI learns, so in order to justify its own value, it spits out a PDF to you every now and then to make you feel like you’re doing your job”

I’ll give you an example. There are many products that you connect to all of your different advertising platforms. They kind of house all of your central advertising inventory and run analytics. They’ll tell you things like, “Hey, our most effective ads are the following, and we’re going to run A/B tests of this one against that one.” You can go in and configure and tweak and re-upload new versions and all that sort of stuff. Then you can look at charts and dashboards that you show your boss to say, “Okay, I’m doing a great job here.” I think that the entire product category would be built entirely differently today. The idea would be to ask the AI to generate the ads, run the ads, measure the LTV/CAC of the ads, suggest all of the different bake-offs and A/B tests, and optimize the ads per channel per person. It would just run all that in the background.

When I think about a product like that, I don’t even know what the interface is. It could be one of those shell scripts you just run and never actually see what happens in the background. You just trust in the lords that the money’s going to start coming in. Maybe the AI learns, so in order to justify its own value, it spits out a PDF to you every now and then to make you feel like you’re doing your job. But with that type of product category where it’s like “create, optimize, explore, exploit, iterate,” all of those tasks are individually doable.

If you’re sitting in one of these companies today going, “Oh, shit, maybe Des has a point,” the temptation is to say, “Well, let’s just do one of them.” But the reality is that the actual future is going to be doing all of them, and they’ll all be knitted together. You’ll convince yourself that, “Hey, surely no one’s going to automate all this.” But when you see how good the reasoning of GPT-4 is, it’s not obvious to me why a human would want to log in here every day and eyeball a list and see the red flashing number and be like, “Let’s turn that ad off,” or “Let’s generate 10 versions of this bright green one because it seems like it’s really good.” All of those decisions can be made by AI. I think that’s an example of a massive startup opportunity that is worth pursuing.

Ripe for transformation

Paul: There are some good questions for a startup, say, to clearly understand the actual business they’re trying to attack and what customers care about and value. Is it the kind of front-end stuff, which is much easier for us to see and recognize and think about? Or is it actually, in Workday’s case, the backend stuff? Or, in Stripe’s case, the regulation or the lawyers? I think those are good questions that you and I have talked about that are very useful for bigger companies to think about whether or not they have an opportunity to be legitimately attacked by a startup.

Before that, though, you touched on different categories, and I think we have a couple here that we should go through because they make it concrete for me, and I’m sure for other people too, how things might change. For example, you mentioned multimedia things like video and voice and so on. With SaaS, though, there are a whole bunch of categories – sales tools, project management tools, reporting. Let’s start with sales. Today, lots of companies hire salespeople and spend a shit ton of money training them. How do you think that would change?

“Looking at a list – AI can do it. Lead scoring the list – AI can do it. Emailing these people – AI can do it. Targeting specific testimonials, use cases, and sales decks to this person in this industry – AI can do it”

Des: Every aspect, I think, is susceptible to significant change. The training of salespeople can now be the AI live in the call providing real-time updates on, “Hey, they asked about pricing. Here’s pricing,” and, “Hey, they asked about this. Here’s the slide. Here’s the video to play. Here’s the customer to reference. Here’s the testimonial.” All of your training is going to be much more in-ear versus, “After this call, Johnny, we’re going to sit down and talk to you about all the things you should have said.” It’s much more in the moment. That’s just training. That’s before we can get to your desk.

One role of sales is prospecting. There’s a list, we’re going to go through this list, try to find people who are credible, try and make contact with them – ring them, email them, or maybe target ads against their specific email address, so hopefully, we can follow them around the internet. I haven’t said a single thing that a human needs to do. Look at this list – AI can do it. Lead scoring this list – AI can do it, whether that’s directly or by API-ing over to a ZoomInfo and getting a lead score back. Email these people – AI can do it. Call these people – AI can do it. Target specific testimonials, use cases, and sales decks specific to this person in this industry – AI can do it.

That’s one example. There are companies like Regie.ai and Nooks looking at real specific value points in the sales workflow and saying, “Right, draw a line around this. We can do all of that.” And by the way, that’s awesome news for salespeople. A lot of the undifferentiated heavy lifting will get taken away. Think about everyone’s path to being what they want to be, which, I presume, is either a senior sales leader or a senior sales rep closing bigger deals at higher values: it’s almost like we’ve taken away a lot of the training courses and said, “Hey, it turns out no one needs to do any of that shit anymore, so let’s get you into the mixer straight away.”

Paul: There are two categories of things. One is for some people, like sales – it’s the same job selling, but AI will make the job much easier.

Des: And more fun as well.

Paul: And more fun, for sure. The other category of things is where people’s jobs might change. Project management is another category where people’s jobs will probably change because of AI.

Des: I think so. Project management is quite nuanced. I think this is the one area where you’re seeing a lot of AI applied, and a lot of it is what I call condiment-style AI. It’s like salt and pepper. It’s not the dish – it’s just a little bit of cute shit on top. But I’m wary of the whole “write the first sentence of a status update and press tab to expand,” where it’s like, “I think this project is on course,” tab, “But the following risks remain.” I’d rather that was actually coming out of your head than GPT inferring it because I need you to stand over it. You putting your name against it actually tells me that you professionally think I’d be paying you to understand these things. So I do worry a little bit that sometimes you might get overuse in these areas.

“Rather than logging in every day, you’re just going to be told if shit’s ever going wrong: ‘Why is this project running late?’”

Think about something like an Asana or Jira or Basecamp, and say, “How could AI help?” Again, it comes back to, “Let me know what’s going on in this project.” I think AI can do that. You can basically ask GPT-4 to say, “Read all the most recent threads, append that to your most recent knowledge, and see the semantic differences that an executive would care about to the status of this project and if it’s still on course, and send me that every day as a Slack message.”

And again, we’re moving away from the UI to just being a push versus a pull. Rather than logging in every day, you’re just going to be told if shit’s ever going wrong. “Find the root cause of all these issues. Why is this project running late?” Maybe other stuff like, “Who has contributed the most to this project in terms of making concrete decisions? What was the biggest reason this project was late?” There’s a lot of stuff there that can actually change. I think the current workflow for trying to work this out is, honestly, and you’ve probably had to do this now and then, to sit down and read four Google Docs and three Basecamp posts or whatever to try and work out what happened when you were away.
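Editor's sketch: the push model Des describes (read the recent threads, compare them with what was already known, and only speak up when something changed) could look roughly like the Python below. This is an illustration under stated assumptions, not how any particular tool works. The names `build_status_prompt`, `push_status_update`, `ask_model`, and `notify` are hypothetical; `ask_model` stands in for whichever language model is used and `notify` for something like a chat message.

```python
from typing import Callable, Optional


def build_status_prompt(threads: list[str], previous_summary: str) -> str:
    """Assemble the request: recent threads plus the last known status."""
    joined = "\n---\n".join(threads)
    return (
        "You are summarizing a project for an executive.\n"
        f"Previous status:\n{previous_summary}\n\n"
        f"Recent threads:\n{joined}\n\n"
        "Reply with 'NO CHANGE' if the status is materially the same; "
        "otherwise describe what changed and whether the project is on course."
    )


def push_status_update(
    threads: list[str],
    previous_summary: str,
    ask_model: Callable[[str], str],  # hypothetical wrapper around an LLM
    notify: Callable[[str], None],    # hypothetical, e.g. posts a chat message
) -> Optional[str]:
    """Push a message only when the model reports a meaningful change."""
    reply = ask_model(build_status_prompt(threads, previous_summary)).strip()
    if reply.upper() == "NO CHANGE":
        return None  # nothing is pushed, so nobody has to log in and check
    notify(reply)
    return reply


if __name__ == "__main__":
    # Stand-in model so the sketch runs without any external service.
    def fake_model(prompt: str) -> str:
        if "bug" in prompt:
            return "Launch slipped one week: QA found a billing bug."
        return "NO CHANGE"

    sent: list[str] = []
    push_status_update(["QA found a billing bug"], "On course", fake_model, sent.append)
    push_status_update(["Standup notes: all fine"], "On course", fake_model, sent.append)
    print(sent)  # only the first update was pushed
```

Passing the model and the notifier in as plain functions keeps the sketch independent of any vendor API; the "NO CHANGE" convention is an arbitrary choice for the example.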

“Personally, I’m a little allergic to the ‘tab to complete massive paragraphs of writing and judgment’ because I prefer if that actually comes from someone’s brain”

Paul: It doesn’t even matter to me. You know? A lot of stuff has happened, a decision was made, we’re good with the decision, and the context is actually unnecessary.

Des: Yeah, yeah, totally. But sometimes you’re almost just digging for the decision, right? Imagine a world where you can log in and say, “I’ve logged in to Basecamp today because I need to work out if we are on track for August 11th or whatever.” Obviously, we’re not on track for that, given it’s almost the 31st. Being able to get to that level of, “Here’s the thing I want to know and the words don’t really matter,” can be quite powerful. I have yet to see that done well, but I suspect it will happen. The nature of a PM tool will change from that point of view. Identifying conflicting resources and stuff like, “Hey, Paul’s across these seven things, and he’s actually booked to be here,” could be pretty useful, too. So I think, in general, the PM tool is definitely ripe for it, but personally, I’m a little allergic to the “tab to complete massive paragraphs of writing and judgment” because I prefer if that actually comes from someone’s brain, at least right now.

AI calling the shots

Paul: Another one is reporting and reporting tools. For example, we here at Intercom have spent the best part of a decade building deep reporting – editing reports, creating reports, all the kinds of typical things from a crude point of view like create-

Des: Create a new portfolio, update or change a filter, categorize it-

Paul: And the more we build and the more research we do with customers, the more we learn there’s more to build.

Des: It’s a neverending story.

Paul: More configurability, more customization, et cetera. Now, though, you realize AI could probably do a lot of that, and there’s no need to build all of these things or use them if they’ve been built already. We find ourselves in a position where we’re still building reporting features, but are also wondering, “Should we also be building a way for our customers to never need them?” Instead, they’d have some kind of field where they type in the question, like, “Is LTV up or down?” “Has my customer support volume gone down?” “What was the busiest day this week?” It’s all chat-based UI. AI will clearly be good at that. I think it will do things like uncover correlations in data that humans never would purely because there’s so much data.

“A lot of people are only comfortable with AI as a house pet … We have to get more comfortable with AI as a peer”

Des: And it’s so much more powerful than any one person.

Paul: Yeah, exactly. And it can just do so much more. Before, I said to you that I think the role of humans might be less about digging through the data and the analysis and much more about judgment. Usually, it’s doing the analysis, applying human judgment, then making decisions. And I think humans will move away from the analysis part. AI will do that, and they’ll apply the judgment to make the decisions. But you said, and I agree, that AI will make the judgment, too. Can you explain that a bit?

Des: Yeah, sure. I’ll get this wrong, but there’s an educational psychologist called Benjamin Bloom who was trying to describe how you get to know an area of any sort, and he has this thing called “Bloom’s Taxonomy of Educational Objectives”. And at the very, very, very low end is recall. The “can you list the 26 counties of Ireland” type of thing. There’s no depth to that. And at the very, very, very high end is synthesis, “Can you create new stuff based on existing stuff?”

So, it goes something like recall, recognition, comprehension, analysis, and synthesis. I’m skipping one or two there, and we’ll put a better diagram in the show notes. I think a lot of people are only comfortable with AI as a house pet. They like it at the low end. It’s cool in the same way people are cool with typo correction. But we have to get more comfortable with AI as a peer, in a sense. I think AI will be able to apply judgment because even if you take our own bot, Fin, a lot of what Fin does is “given this, answer that.”

“It’s not clear to me where the AI stops in its capability. What is clear is that there’s a human comfort level in terms of, ‘You can go that far, but I need to be the person who fixes this’”

Rewind.ai is a customer of Fin. I’m a Rewind user. It’s an awesome product. Rewind does this thing where it wants to record every meeting, and I didn’t want to do that. So, I was trying to disable this pop-up and went to Rewind’s help. I said, “How do I disable the pop-up?” And Fin said, “Oh, here’s how you do it.” And it linked up an article that never directly said, “To disable this pop-up, here’s how you do it.” What the article said was something along the lines of, “If you want to turn on this feature, you go here to do it. By the way, when you do it, it won’t be always on. It’s going to pop up every time.” And Fin inferred, having read that article, that if that’s the thing and that’s the preference for it, it must be on this screen. And it basically gave me a perfect answer. And I’m using this not to promote Fin, but it’s just an example of the deduction or judgment and suggestion. It was confident enough to tell me that was the answer. It’s a simple example where no one in Rewind had to actually write that answer out. Fin worked it out.

In the case of reporting, imagine we ask, “Show me which CS reps get the highest scores,” which is a pretty simple ask. Then you could say, “Show me what topics correlate with the highest scores,” which is probably pretty simple, and then you could say, “Show me which CS reps tend to perform the lowest on which topics,” and maybe that could be where you have better training courses, and then you could say, “Prioritize that list and suggest the type of training they should do,” and, “Mail those people and tell them to go on that training.” All of that is judgment in a sense. It’s not clear to me where the AI stops in its capability. What is clear is that there’s a human comfort level in terms of, “You can go that far, but I need to be the person who fixes this.” Do you know the old Dilbert cartoon of the pointy-haired boss who likes to feel important, so he wants to be the person who presses the launch button? A lot of our first pass attempts at using AI will be like that. They’ll be like, “Well, hang on a second. All that low-level shit can go away, but I still need to be here for the important stuff.”
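Editor's sketch: the first few asks in that chain are ordinary aggregation, which the Python below illustrates on a handful of made-up records. Everything here is an assumption for illustration: the sample data, the 1 to 5 score scale, the function names, and the 3.5 cutoff. The "judgment" part of the chain is the final step, where something has to decide who gets flagged and in what order.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sample data: (rep, topic, CSAT score on a 1-5 scale).
CONVERSATIONS = [
    ("Ana", "billing", 5), ("Ana", "billing", 4), ("Ana", "api", 2),
    ("Ben", "billing", 3), ("Ben", "api", 5), ("Ben", "api", 4),
]


def average_by(rows, key):
    """Group rows by key(row) and return {group: mean score}."""
    groups = defaultdict(list)
    for row in rows:
        groups[key(row)].append(row[2])
    return {group: mean(scores) for group, scores in groups.items()}


def weakest_topic_per_rep(rows):
    """For each rep, find the topic with the lowest average score."""
    by_rep_topic = average_by(rows, key=lambda r: (r[0], r[1]))
    weakest = {}
    for (rep, topic), score in by_rep_topic.items():
        if rep not in weakest or score < weakest[rep][1]:
            weakest[rep] = (topic, score)
    return weakest


def suggest_training(rows, threshold=3.5):
    """Prioritized (rep, topic, score) list, lowest average first.

    The threshold is an arbitrary assumption, not a recommended value.
    """
    flagged = [
        (rep, topic, score)
        for rep, (topic, score) in weakest_topic_per_rep(rows).items()
        if score < threshold
    ]
    return sorted(flagged, key=lambda item: item[2])


if __name__ == "__main__":
    print(average_by(CONVERSATIONS, key=lambda r: r[0]))  # scores per rep
    print(average_by(CONVERSATIONS, key=lambda r: r[1]))  # scores per topic
    print(suggest_training(CONVERSATIONS))                # who to train on what
```

The last step Des mentions, emailing those people, is deliberately left out: that is exactly the point at which a team has to decide how much it trusts the output.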

“What you can imagine might happen is all the work up to the last step of the marathon might be done by AI, and then a human comes in and goes, ‘Yep, click’”

There’s some dark, futuristic cartoon where there are a load of humans on a factory floor, they’re all there to do certain things, and there’s a button or a switch they can click in case anything’s ever gone wrong. And then, on the other side of the wall, those things aren’t wired up to anything. It’s just there to make the humans feel important. We give them a sense that they’re part of this process as well. I think we’re going to see that bar creep up and up and up, especially given that the reality is it tends to be pretty right, it tends to be quite accessible and probably works 24/7, 365. I think you’re going to see what people define as judgment creep up and up and up.

The stuff where it gets more funky is AI is not perfect. Neither are humans, but AI is not perfect. And there are some decisions where you’re like, “Right, let’s not launch the email campaign without a human eyeballing it.” Totally valid. So, what you can imagine might happen is all the work up to the last step of the marathon might be done by AI, and then a human comes in and goes, “Yep, click.” That makes sense. That’s just logical.

Paul: We’re talking about analysis to synthesis, and there’s judgment and making decisions. And humans, for sure, will feel the need to control it and hit the red button. And so the decision-making of, “Do we or don’t we hit the red button,” is left to us. How far away do you think we are from really great software tools that are excellent at judgment and pushing us to go, “Maybe they should make the decision”?

Des: Do you know the RBAC features we’ve built in Intercom, role-based access controls? I think it’s going to be like that. I think we’re going to be building preference dialogues into Intercom and other tools where it basically says you’ll have a lot of settings that begin with, “Allow the AI to…” You could imagine allowing AI to reply or request CSAT scores, allowing AI to ping my own support team when CSAT scores are dropping… All the way up to slightly bigger things like allowing AI to post a job opening on Indeed.com because we’re clearly understaffed. There’s a spectrum. What are the things humans would do there, and what type of workflow, almost like an “if this, then that,” do you play out? That’s basically how I think we’re going to end up.
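As an editorial aside, the “Allow the AI to…” idea can be pictured as a small permissions table that is checked before any action runs. The sketch below is purely illustrative: the `Action` names, the `AIPermissions` class, and the `decide` method are hypothetical and are not taken from Intercom’s product. It assumes every toggle is off by default, so anything not explicitly allowed is routed to a human.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Set


class Action(Enum):
    # Hypothetical actions, loosely based on the examples in the conversation.
    REPLY_TO_CUSTOMER = "reply_to_customer"
    REQUEST_CSAT = "request_csat"
    ALERT_SUPPORT_TEAM = "alert_support_team"
    POST_JOB_OPENING = "post_job_opening"


@dataclass
class AIPermissions:
    # Each member of this set corresponds to one "Allow the AI to..." toggle
    # that has been switched on. The set starts empty (assumption: off by default).
    allowed: Set[Action] = field(default_factory=set)

    def allow(self, action: Action) -> None:
        """Switch on the toggle for a single action."""
        self.allowed.add(action)

    def decide(self, action: Action) -> str:
        """Return who handles the action: the AI, or a human who must approve it."""
        if action in self.allowed:
            return "ai_executes"
        return "needs_human_approval"


settings = AIPermissions()
settings.allow(Action.REPLY_TO_CUSTOMER)
settings.allow(Action.REQUEST_CSAT)

print(settings.decide(Action.REQUEST_CSAT))
print(settings.decide(Action.POST_JOB_OPENING))
```

In this sketch the “bar creeping up” that Des describes would simply be more toggles moving into the allowed set over time, with the bigger decisions staying behind human approval for longer.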

“When people tell me we’re never going to do X with AI, I’m like, ‘Mate, I’ve done this rodeo many times, and I’m telling you, you probably will’”

How long before we see this? I think there won’t be some watershed moment where it’s like, “It’s here.” What might happen is we sit down next year, and the next conversation we have might be whether the AI should be sending suggested next steps. We’re past discussing correlation. That ship has sailed. I think this conversation would be the continuous incremental creep of what we believe to be possible and what we’re comfortable with.

Paul: Yeah, that makes sense to me, too. History is the best predictor of the future in a lot of these cases. It’s a similar pattern with things like the first iPhone, which was very, very basic, and then, with every release, it was slowly maturing-

Des: You’re totally right. When I was a Web 2 consultant, our discussion at the time was like, “You’ll never do X in the cloud.” “You’ll never have a word processor in the cloud. You’ll never have a video editing tool in the cloud.” And now you can play Counter-Strike in the cloud. Literally full-on, proper desktop gaming in the cloud, and it’s all done through your browser. And similarly, “You’ll never do X on a phone. Yeah, phone’s good and all that, but you’re not really going to…” Whatever the thing is, you’ve done it. Applying for a mortgage, buying a car. It turns out you do all of these things. So, when people tell me we’re never going to do X with AI, I’m like, “Mate, I’ve done this rodeo many times, and I’m telling you, you probably will.”

Jobs don’t change, technologies do

Paul: There are a couple of practical questions I know you’ve used a lot to talk to our team and our product org to get them to think about how quickly this might happen to them and their industry. How can this AI technology be applied to create new features? How can it be applied to make existing features easier, better, and more powerful? Do you want to talk us through that?

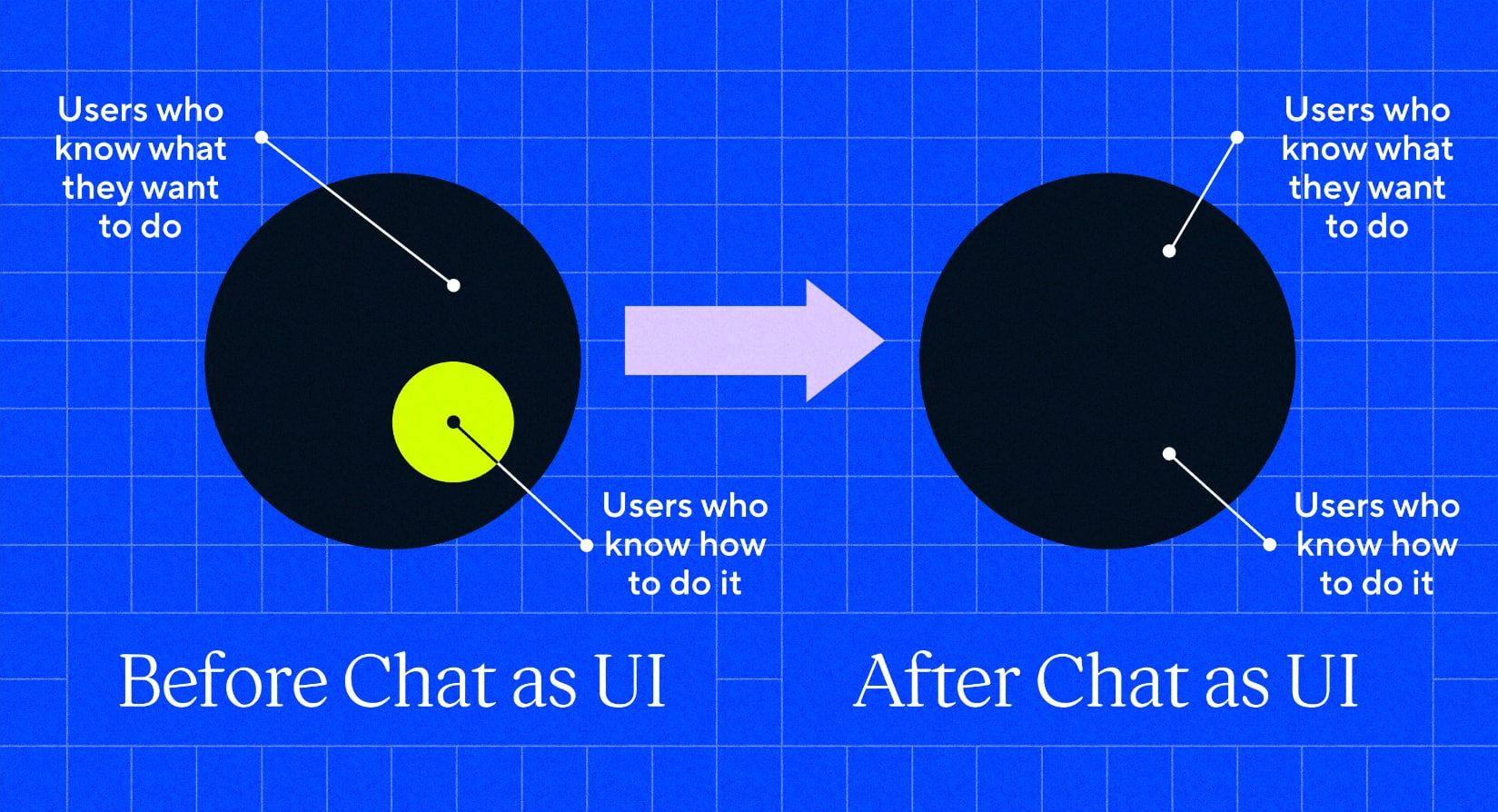

Des: The core point I always come back to with all new capabilities, whether it’s AI or chatbots or messaging, is, what is a product? A product is usually a platform of features that let a user get a certain job or a certain set of jobs done. The question you ask yourself as a product manager or product leader is, “Given the technologies available, what is the best way our users can get this done?” It’s the Jobs-to-be-Done idea, which is fixated on this: jobs don’t change, technologies change. The solutions change, but the job is the same.

“Tools for narrow markets that require specialism become tools for general markets”

Generally, with these things, you’re trying to make it so that more people can do the job. A great example of that is Equals, the spreadsheet company. Let’s say I don’t know Excel functions, but I do know what I want out of them. I want to see the average growth rate of this startup over the last six months if you exclude organic traffic. I don’t know how to do that, but I can write it into a box, Equals will work out what I mean, and it’ll write up the formula for me. I don’t know if the formula is right, but it seems to be most of the time. Or if it’s wrong, it’s so egregiously wrong that it’s not a problem because I can correct it. That’s a great example where it’s made it possible for more people to do the thing.

If your tool involves either arcane languages, complex query stuff, or creativity, as in, “I know I wanted to have a fancy black image, but I don’t know how to design this. I’m not a designer,” or, “We want to let all of our English-speaking support staff be able to support all languages in Europe,” AI can probably help. Can AI increase the number of people who can do the job? Usually, that has a massive impact on your market size. It means more people can use your tool. More people can use Equals than Excel.

Paul: Well, tools for narrow markets that require specialism become tools for general markets.

Des: Yeah, because you change one core thing – the number of people who know what they want to do and the number of people who can do it are now the same thing. That’s huge. AI and all of this technology make it so that more people can use your product, ultimately. Chat UI is a huge part of that.

Another one is helping people increase the power of their work. The analogy here would be like a crane. If I jump into a crane, I am now much stronger than before. I can move stuff at a far greater rate. It’s still me doing the work, but now I’m lifting heavier stuff than I was capable of. Similarly, if a human can summarize one conversation at a time, can AI summarize one million conversations at a time? You mentioned looking at correlation across all data sets, and a human can do that one by one. AI does not need to act one by one. By increasing the capability of the human, the scope of their impact is far greater.

“What are new things that people can do? What are the things that are the 10x of human capability? What are the things where you can remove entire chunks of work?”

Paul: The crane is a great example. You’re saying one guy gets in the crane and lifts the volume of things 80 people would have had to do manually. What are the things that lots of people are required to do where AI could make it so that one person overseeing it can do it or it can do it by itself?

Des: Absolutely. For example, with Fin Snippets in Intercom, when one person answers a question properly, Fin will say, “Hey, is that the right answer? Because if it is, I’ll take it from here.” And that’s one person effectively doing the work of everyone who would otherwise have to answer that question in the future. It is a type of crane.

And then, the third category you have to look out for is, nearly ironically, the one people tend to overlook. There are things we can get rid of entirely. It’s not even a dude in the crane anymore – we’ve taken away the need for that in its entirety.

If you recall, say, the advertising example I talked about earlier, where Johnny logs in every day to look at all the various charts and tables, there’s definitely an argument where you just don’t need that done at all. You just assume, from this point onwards, in the same way you assume that electricity works in your building, you assume that the ads are optimized. Or if they’re not optimized, they’re getting optimized, and there’s nothing you need to do about it.

So yeah, to zoom back:

- What are the new capabilities?

- What are new things that people can do?

- What are the things that are the 10x of human capability?

- What are the things where you can expand the addressable market?

- And then, lastly, what are the things where you can remove entire chunks of work?

That’s generally how I think you should be thinking about this. This is why I’m not an AI skeptic. I see too many opportunities.

Even in a pretty prescribed domain like customer support, it’s just so clear all of the ways in which we could use 10 times the number of AI and ML people to go after all the many opportunities in the space. Every time I get pinged by a “We’re doing AI for customer support” type startup, I am quite frustrated, because I’m like, that’s a brilliant idea. We either have or haven’t thought of it, but there are so many brilliant ideas. That’s just in one little domain.

Paul: Yeah. That’s really good practical advice. We’ve talked a lot today about how startups should think about entering categories and how AI can disrupt that category or not. On the incumbent side, I worry more about those companies because I’m subject to this myself, at times, where I’m like, “Hang on a minute. We’re domain experts. We’ve been here 10 years doing this. There’s no possible way AI could ever know the things we know.”

“It’s a good time to reread The Innovator’s Dilemma and remind yourself of the true nature of disruption”

Des: Totally.

Paul: Right? Nonsense. Of course it can, and it will. And the older you get, the stronger the feeling gets. Any last pressing advice for startups, incumbents, or even investors?

Des: It’s a good time to reread The Innovator’s Dilemma and remind yourself of the true nature of disruption. It has to be a new attack vector that the incumbent businesses can’t easily take. And I think a lot of people are going to say that they’re going to disrupt industries with AI. If you’re ever tempted to say those words at all, do yourself a favor and read even one of the six-pager Harvard Business Review papers on it. Refresh on exactly what it means to be disruptive, whether it’s low-end disruptive, the new use-case disruptive, or new market disruptive. Just make sure you know what you’re saying.

I think a lot of businesses will build a really cool piece of product, but it’ll ultimately end up being unpaid R&D for the much bigger company because they’re going to look down and go, “That’s clearly the right thing. We should do that.” And that will be it. You might have a cool new way of doing some specific task in accounting, surveys, time tracking, expense tracking, or whatever. You might have a cool little feature dripping in AI, and it might even get Product Hunt feature of the day. You might have a sexy landing page. I might even tweet about it and say, “Check out this dope shit.” It could be stunning.

The question is, is it enough of an attack angle to be truly disruptive? Or will some principal engineer or designer sit down in Mega Big Corp and be like, “We should probably copy that”? It might take them a year, but in that year, you’re unlikely to have built a fully mature platform. That’s the challenge, and maybe that’s okay. Maybe you’re okay being like, “Hey, we’re going to go after the low end of the market. We don’t actually have to compete with the Megacorp.” That’s fine, but just make sure you’re making all those decisions together and don’t just be like, “We’re going to kill Salesforce because we have an AI-based lead scoring algorithm,” or something like that. Salesforce is going to work on that.

Paul: That’s great. Let’s leave it there for today, and I’ll see you maybe in 12 months so we can figure out what’s next.